As mental health challenges rise globally, with increasing rates of anxiety, depression, and loneliness, many individuals are turning to unconventional solutions in search of comfort and connection. Among the most surprising and rapidly evolving tools is artificial intelligence. Once confined to research labs and tech-heavy domains, AI has now entered the realm of emotional well-being. Through chatbots, virtual assistants, and emotionally intelligent avatars, AI companions are offering real-time support, companionship, and even empathy. This article delves into how these digital allies are transforming the landscape of emotional care, the science behind their effectiveness, their current limitations, and the ethical considerations they bring.

The Rise of Emotional AI: Why It’s Needed Now

The demand for mental health services has surged, yet the supply of human therapists, counselors, and support networks has not kept pace. Long waiting times, high costs, social stigma, and geographical barriers leave many without adequate access to care. In parallel, the global loneliness epidemic is intensifying, particularly among the elderly, teenagers, and remote workers. Enter AI companions—tools that offer 24/7 availability, anonymity, and zero judgment.

Unlike traditional apps focused solely on mood tracking or meditation, today’s AI companions are designed to simulate meaningful conversation, offer cognitive-behavioral strategies, remind users of coping skills, and serve as non-threatening confidants. They’re being used not only by individuals with diagnosed conditions but also by those simply looking for emotional regulation, habit formation, or a friendly presence.

How AI Companions Work: Technology Behind the Empathy

Modern AI companions use a combination of natural language processing (NLP), machine learning, sentiment analysis, and psychological frameworks to deliver responses that mimic human empathy. These systems are trained on vast datasets including psychotherapy transcripts, emotional tone patterns, and cognitive-behavioral therapy (CBT) techniques. When a user shares thoughts or feelings, the AI analyzes the input for emotional cues—tone, word choice, syntax—and then crafts a response intended to validate, support, or guide.
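As a rough illustration of that analyze-then-respond loop, here is a minimal Python sketch. It assumes a tiny hand-written cue lexicon and canned replies; the names `CUE_LEXICON`, `RESPONSES`, `detect_emotion`, and `respond` are hypothetical and do not come from any real product, which would rely on trained language models rather than word lists.

```python
import re

# Hypothetical cue lexicon for illustration only; production systems
# use trained classifiers, not hand-picked word lists.
CUE_LEXICON = {
    "sadness": {"sad", "lonely", "hopeless", "crying", "miserable"},
    "anxiety": {"anxious", "worried", "nervous", "panic", "scared"},
    "joy": {"happy", "excited", "proud", "grateful", "relieved"},
}

# Hypothetical canned replies, one per detected emotion.
RESPONSES = {
    "sadness": "That sounds really difficult. Do you want to talk more about it?",
    "anxiety": "That sounds stressful. What feels most pressing right now?",
    "joy": "That's wonderful to hear! What made it feel so good?",
    "neutral": "Thanks for sharing. How are you feeling about it?",
}


def detect_emotion(message: str) -> str:
    """Return the emotion whose cue words appear most often, or 'neutral'."""
    words = re.findall(r"[a-z']+", message.lower())
    counts = {
        emotion: sum(word in cues for word in words)
        for emotion, cues in CUE_LEXICON.items()
    }
    best = max(counts, key=counts.get)
    return best if counts[best] > 0 else "neutral"


def respond(message: str) -> str:
    """Pick a validating reply that matches the detected emotion."""
    return RESPONSES[detect_emotion(message)]


if __name__ == "__main__":
    print(respond("I feel so lonely and sad today"))
```

The sketch covers only word choice; tone and syntax, which the paragraph above also mentions, would need a richer model.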

Some platforms, such as Woebot or Replika, go further by building a long-term memory of conversations. This allows them to adapt over time, offering more personalized interactions. Others, like Wysa or Youper, integrate AI with established clinical methodologies, enabling them to guide users through structured mental health exercises such as reframing thoughts or mindfulness practices.

These tools don’t just talk—they listen, learn, and evolve.

Types of AI Companions and Their Unique Roles

AI companions vary in form and function depending on their design purpose:

- Conversational Bots: These text- or voice-based companions mimic human dialogue. They are often integrated into apps or messaging platforms and are designed for quick check-ins, therapeutic exercises, and emotional validation.

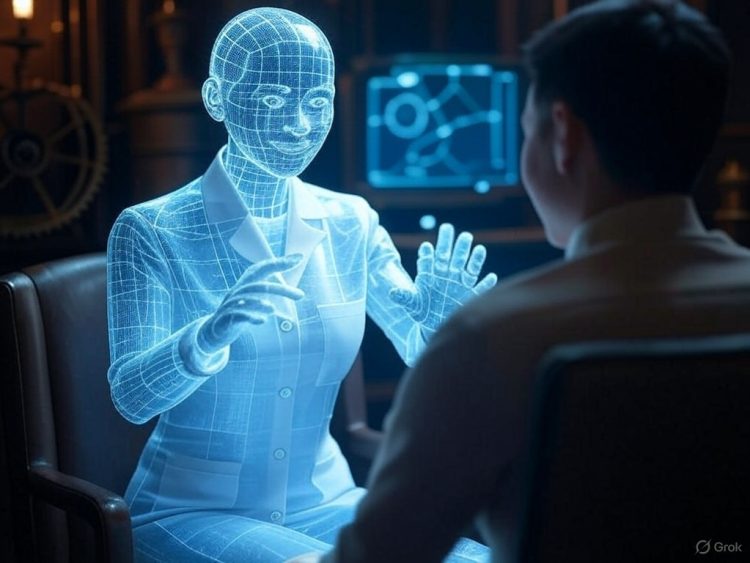

- Virtual Avatars: Combining visual cues with speech, these companions appear on screens as humanoid figures. Their expressions, tone, and body language enhance emotional engagement, making them useful for populations such as children or the elderly.

- Voice Assistants with Empathy Layers: Traditional voice assistants like Alexa or Google Assistant are being augmented with emotion-sensing layers to better respond to users’ moods and mental states.

- Companion Robots: Physical embodiments of AI—like the Japanese robot Lovot or Paro the seal robot—are particularly effective in elder care, where tactile interaction and presence play a crucial role.

Each type of AI companion meets different psychological needs, from cognitive stimulation and mood regulation to the fulfillment of basic human connection.

Emotional Benefits Supported by Research

Several studies suggest that AI companions can support emotional well-being:

- A 2017 randomized trial led by Stanford-affiliated researchers found that college students who used Woebot for two weeks showed a significant reduction in depressive symptoms compared with an information-only control group; anxiety declined in both groups.

- Elder care centers using AI-powered pets or robots reported improved mood, reduced agitation in dementia patients, and enhanced social interaction.

- Teenagers and young adults using Replika noted decreased feelings of loneliness and greater emotional resilience during stressful periods.

These benefits stem not from the illusion that AI is sentient, but from the psychological principle that humans can form meaningful bonds with responsive systems—even if they know the system is artificial. The act of expressing emotions, receiving validation, and engaging in consistent self-reflection is inherently therapeutic.

AI and Empathy: Can Machines Truly Understand Emotion?

One of the most debated aspects of AI companionship is whether it can truly understand emotions. The answer, as of now, is nuanced. AI does not feel emotions, but it can recognize, categorize, and simulate emotional responses based on data. This is sometimes referred to as “synthetic empathy.”

Synthetic empathy enables AI to respond with appropriate language and tone, saying “That sounds really difficult. Do you want to talk more about it?” when a user expresses sadness, or offering celebration when users share good news. While the empathy is simulated, the effect on users can be genuine. Sherry Turkle of MIT has described such technology as offering “the illusion of companionship without the demands of friendship”; she raises this as a caution, yet for many users even that illusion can be comforting.

That said, AI should not be seen as a replacement for deep, nuanced human relationships, especially in complex or crisis situations. But for everyday emotional support, check-ins, and wellness reinforcement, AI provides a scalable, stigma-free tool.

Balancing Boundaries: What AI Should and Shouldn’t Do

AI companions are not therapists. Most developers clearly state that these tools are for general well-being and not substitutes for professional mental health treatment. They are not equipped to handle crises like suicidal ideation, abuse, or trauma. Responsible platforms immediately redirect users to emergency services or human support lines when they detect high-risk language.
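The redirect step can be pictured as a screening layer that runs before the companion replies. The Python sketch below is a simplified assumption, not how any named platform works: `HIGH_RISK_PATTERNS`, `ESCALATION_MESSAGE`, `is_high_risk`, and `route_message` are hypothetical names, and a handful of regular expressions is far too crude for real deployments, which would combine trained classifiers with human review.

```python
import re
from typing import Callable

# Hypothetical patterns for illustration; keyword matching alone misses
# many real cases and is not sufficient for a production system.
HIGH_RISK_PATTERNS = [
    re.compile(r"\b(kill|hurt|harm)\s+myself\b", re.IGNORECASE),
    re.compile(r"\bsuicid(e|al)\b", re.IGNORECASE),
    re.compile(r"\bend\s+my\s+life\b", re.IGNORECASE),
]

# Hypothetical handoff text pointing the user to human help.
ESCALATION_MESSAGE = (
    "I'm not able to support you safely with this. If you are in immediate "
    "danger, please contact your local emergency services or a crisis line."
)


def is_high_risk(message: str) -> bool:
    """Return True if any high-risk pattern appears in the message."""
    return any(pattern.search(message) for pattern in HIGH_RISK_PATTERNS)


def route_message(message: str, companion_reply: Callable[[str], str]) -> str:
    """Escalate high-risk messages; otherwise let the companion answer."""
    if is_high_risk(message):
        return ESCALATION_MESSAGE
    return companion_reply(message)


if __name__ == "__main__":
    print(route_message("Work was rough today", lambda m: "Tell me more."))
```

Running the check before the normal reply path means a high-risk message never reaches the conversational model, which keeps the boundary explicit.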

Maintaining clear boundaries is essential for users to understand where AI’s role ends. These companions are best viewed as supplements—complementing therapy, bridging gaps in support, or helping users build healthier habits between sessions.

Ethical and Privacy Considerations

As AI companions become more deeply integrated into our emotional lives, ethical concerns grow. Users share intimate thoughts, fears, and confessions with AI—raising questions about data security, consent, and usage.

- Who has access to your conversations?

- Is your emotional data being monetized or used to train future models?

- Can AI manipulate emotional states to influence behavior, purchases, or beliefs?

To safeguard users, developers must uphold strict privacy standards, offer transparency on data usage, and provide opt-out mechanisms. Regulatory bodies are beginning to explore digital wellness laws, but until comprehensive frameworks are established, user education and informed consent are vital.

AI in Specialized Populations: A Closer Look

Some populations are particularly well-suited to benefit from AI companionship:

- Seniors: Many elderly individuals live alone or face cognitive decline. AI companions offer reminders for medication, conversation to stave off isolation, and even simple games to stimulate memory.

- Teens and Young Adults: Digital natives are comfortable engaging with AI. For those hesitant to speak with adults or therapists, AI provides a safe and accessible outlet.

- Neurodivergent Individuals: For people with autism or social anxiety, AI can be a low-pressure interaction partner, helping them practice conversation and regulate emotions.

- Caregivers: Providing emotional care for others often leads to burnout. AI tools offer caregivers a way to decompress and receive non-judgmental support.

The Future of AI Emotional Companionship

We are only at the dawn of emotionally intelligent AI. Future developments are likely to include:

- Multimodal sensing: AI companions will interpret facial expressions, tone of voice, and body language to deepen their emotional understanding.

- Augmented reality and haptics: Users may interact with lifelike holographic AI or experience comforting touch through wearable technology.

- Integrated health ecosystems: AI companions will sync with medical devices, therapy records, and digital health platforms to offer personalized care and alerts.

- Cultural and linguistic adaptability: Future AI companions will understand context-specific emotional norms, making them more effective across global populations.

Ultimately, the goal is not to create AI that replaces humans, but to enhance human well-being in a world that often lacks time, connection, and emotional space.

Conclusion: Embracing the Digital Shoulder to Lean On

AI companions are not a cure-all, but they represent a promising frontier in emotional support. In an era defined by speed, disconnection, and emotional overload, having a non-judgmental, always-available digital presence can be transformative. Whether you’re managing daily stress, navigating loneliness, or simply seeking a reflective space, AI offers a new form of companionship—one that blends technology with tenderness.

As with any relationship, even a digital one, the value lies not in perfection but in presence. And in this new frontier, presence is something AI can provide, perhaps more consistently than the human world currently allows.